Hundredfold Boost in Modeling Efficiency, Revolutionizing Productivity in Gaming and Digital Twins

I. What is the Hunyuan 3D World Model?

On July 27, 2025, at the World Artificial Intelligence Conference (WAIC), Tencent officially launched and open-sourced Hunyuan 3D World Model 1.0, which it describes as the industry’s first open-source world generation model supporting immersive exploration, interaction, and simulation. As part of Tencent’s Hunyuan large-model family, the model aims to fundamentally transform 3D content creation.

Traditional 3D scene construction requires professional teams and weeks of effort. In contrast, the Hunyuan 3D World Model can generate fully navigable, editable 3D virtual scenes in minutes from a single text description or image. Its core mission is to lower the high barriers and overcome the low efficiency of digital content creation, meeting critical needs in fields such as game development, VR experiences, and digital twins.

At the conference, Tencent also presented its “1+3+N” AI application framework to the public for the first time, with the Hunyuan large model as the core engine and the 3D World Model as a key component of its multimodal capability matrix. Tencent Vice President Cai Guangzhong emphasized: “AI is still in its early stages. We need to push technological breakthroughs into practical applications, bringing user-friendly AI closer to users and industries.”

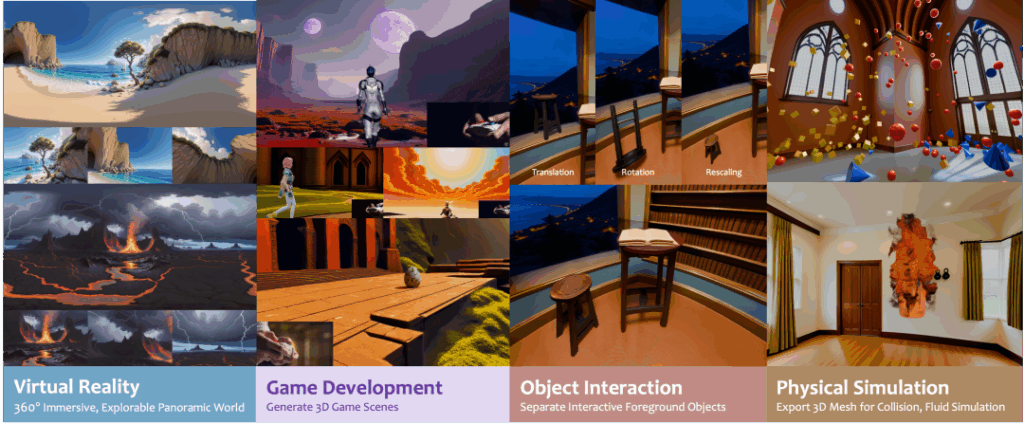

II. What Can the Hunyuan 3D World Model Do?

- Zero-Barrier 3D Scene Generation

  - Text-to-World: Input “a cyberpunk city in a rainy night with glowing neon hovercar lanes,” and the model generates a complete scene with buildings, vegetation, and dynamic weather systems.

  - Image-to-World: Upload a sketch or photo to create an interactive 3D space, seamlessly compatible with VR devices such as Apple Vision Pro.

- Industrial-Grade Creation Tools

  - Outputs standardized mesh assets, directly compatible with Unity, Unreal Engine, Blender, and other mainstream tools.

  - Supports layered editing: independently adjust foreground objects, swap sky backgrounds, or modify material textures.

  - Built-in physics simulation engine automatically generates dynamic effects such as raindrop collisions and light reflections.

- Revolutionary Efficiency Gains

  - Game scene creation reduced from 3 weeks to a 30-minute draft plus a few hours of fine-tuning.

  - Modeling labor costs cut by over 60%, enabling small teams to rapidly prototype ideas.
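To make the layered-editing and mesh-export points concrete, here is a minimal sketch assuming a hypothetical scene layout in which each semantic layer is stored as its own triangle mesh. Replacing one layer leaves the others untouched, and the whole scene is serialized to Wavefront OBJ, a plain-text mesh format that Blender, Unity and Unreal Engine can import. The `Mesh`, `LayeredScene` and `flat_quad` names are illustrative assumptions, not part of Hunyuan's released code or output format.

```python
from dataclasses import dataclass, field


@dataclass
class Mesh:
    vertices: list  # (x, y, z) tuples
    faces: list     # (i, j, k) triangles as zero-based vertex indices


@dataclass
class LayeredScene:
    # Hypothetical layout: one mesh per semantic layer, keyed by layer name.
    layers: dict = field(default_factory=dict)

    def set_layer(self, name, mesh):
        """Add a layer, or replace an existing one without touching the others."""
        self.layers[name] = mesh

    def to_obj(self):
        """Serialize all layers to a Wavefront OBJ string, one named object per layer."""
        lines = []
        offset = 0  # OBJ vertex indices are 1-based and shared across the whole file
        for name, mesh in self.layers.items():
            lines.append(f"o {name}")
            lines.extend(f"v {x} {y} {z}" for x, y, z in mesh.vertices)
            lines.extend(
                f"f {i + offset + 1} {j + offset + 1} {k + offset + 1}"
                for i, j, k in mesh.faces
            )
            offset += len(mesh.vertices)
        return "\n".join(lines) + "\n"


def flat_quad(height, half_size):
    """Horizontal square made of two triangles; a stand-in for real layer geometry."""
    s = half_size
    vertices = [(-s, height, -s), (s, height, -s), (s, height, s), (-s, height, s)]
    return Mesh(vertices, [(0, 1, 2), (0, 2, 3)])


scene = LayeredScene()
scene.set_layer("ground", flat_quad(height=0.0, half_size=10.0))
scene.set_layer("sky", flat_quad(height=50.0, half_size=100.0))
# Swap only the sky layer; the ground mesh is left as it was.
scene.set_layer("sky", flat_quad(height=80.0, half_size=100.0))

obj_text = scene.to_obj()
print(obj_text)
```

Writing one `o` object per layer keeps the layers separate after import into a DCC tool, which mirrors the independent-editing idea; face indices are offset per layer because OBJ vertex numbering is global and 1-based.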

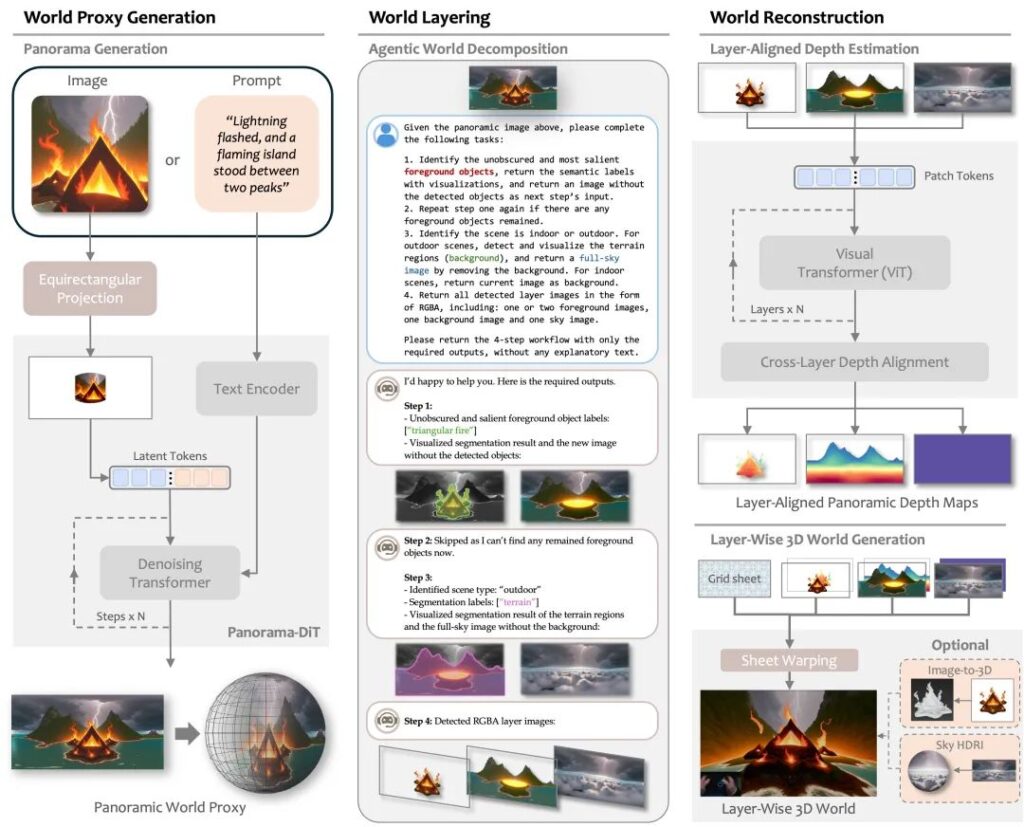

III. Technical Principles of the Hunyuan 3D World Model

The model’s breakthrough lies in its “semantic hierarchical 3D scene representation and generation algorithm”:

- Intelligent Scene Decomposition

  Complex 3D worlds are broken down into semantic layers (e.g., sky/ground, buildings/vegetation, static/dynamic elements), enabling separate generation and recombination of elements. This layered approach ensures precise understanding of complex instructions such as “a medieval castle with a flowing moat.”

- Dual-Modality Driven

  - Text-to-World: Multimodal alignment technology maps text descriptions to structured 3D spatial parameters.

  - Image-to-World: Uses panoramic visual generation and layered 3D reconstruction to infer depth from 2D images.

- Physics-Aware Integration

  While generating geometric models, the algorithm automatically assigns physical properties (e.g., gravity coefficients, material elasticity), making scenes not only viewable but also physically interactive.
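The geometric half of the Image-to-World step can be illustrated with a minimal sketch. It assumes a panorama has already been generated together with a per-pixel depth map and per-pixel semantic labels (the learned part, which is not shown), then lifts each pixel into 3D with the standard equirectangular projection and groups the points into layers, attaching default physical properties to each. The label set, the `PhysicsProps` fields and their values are hypothetical; this is not Tencent's implementation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicsProps:
    # Hypothetical per-layer physical attributes, echoing the "gravity
    # coefficients" and "material elasticity" mentioned in the text.
    is_static: bool
    gravity_scale: float  # 0 = unaffected by gravity, 1 = normal gravity
    restitution: float    # elasticity: 0 = no bounce, 1 = perfectly elastic


# Assumed default properties per semantic layer label (illustrative values only).
DEFAULT_PHYSICS = {
    "sky": PhysicsProps(is_static=True, gravity_scale=0.0, restitution=0.0),
    "ground": PhysicsProps(is_static=True, gravity_scale=0.0, restitution=0.2),
    "object": PhysicsProps(is_static=False, gravity_scale=1.0, restitution=0.5),
}


def pixel_to_direction(u, v, width, height):
    """Unit ray direction for pixel (u, v) of an equirectangular panorama, y axis up."""
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi  # longitude in (-pi, pi)
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi  # latitude, positive in the top half
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))


def lift_panorama(depth, labels):
    """Back-project every pixel to 3D and group the points by semantic layer.

    depth[v][u] is the distance along the pixel's viewing ray and labels[v][u]
    is its layer name. Returns {label: (points, PhysicsProps)}.
    """
    height, width = len(depth), len(depth[0])
    layers = {}
    for v in range(height):
        for u in range(width):
            dx, dy, dz = pixel_to_direction(u, v, width, height)
            d = depth[v][u]
            label = labels[v][u]
            points, _ = layers.setdefault(label, ([], DEFAULT_PHYSICS[label]))
            points.append((d * dx, d * dy, d * dz))
    return layers


# Toy 2x4 panorama: the top row is sky (placed far away), the bottom row is
# ground with one "object" pixel slightly closer to the camera.
depth = [[1000.0, 1000.0, 1000.0, 1000.0], [2.0, 2.0, 1.5, 2.0]]
labels = [["sky"] * 4, ["ground", "ground", "object", "ground"]]
layers = lift_panorama(depth, labels)
for name, (points, physics) in layers.items():
    print(name, len(points), physics)
```

Keeping points grouped per layer is what lets one layer be regenerated or given different physics without touching the rest of the scene; a real pipeline would go on to turn each layer's points into a mesh.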

According to Tencent, the technology ranks first in Chinese-language understanding and scene restoration on the LMArena Vision leaderboard, and its aesthetic quality surpasses that of mainstream open-source models by over 30%.

IV. Application Scenarios

- Game Industry Transformation

  - Rapid Prototyping: Generate base scenes, allowing developers to focus on core gameplay mechanics.

  - Dynamic Level Generation: Create new maps in real time based on player behavior, such as random dungeons in RPGs.

- Digital Twin Applications

  - Factory Simulation: Upload production line photos to generate virtual factories for testing robot path planning.

  - Architectural Visualization: Convert CAD drawings into navigable showrooms with real-time material adjustments.

- Inclusive Creation Ecosystem

  - Education: Students can generate 3D battlefields from history textbooks for immersive strategy learning.

  - Personal Creation: Parents can turn children’s doodles into interactive fairy-tale worlds, building family-exclusive metaverses.

- Robot Training

  - Integrated with Tencent’s Tairos embodied intelligence platform, generated scenes train service robots for household tasks.

V. Demo Examples

Official Showcases:

- Futuristic City Generation: The prompt “a neon-lit floating city after rain” produces a 3D streetscape with holographic billboards, flying cars, and dynamic rain reflections.

- Natural Scene Creation: Upload a forest photo to generate an explorable 3D jungle, where users can remove trees, add tents, and modify layouts in real-time.

Industry Test Results:

- A game studio used the prompt “fantasy elf village” to generate a base scene, adjusted architectural styles, and reduced development time by 70%.

VI. Conclusion

The open-sourcing of the Hunyuan 3D World Model marks a shift in 3D content creation from professional studios to the general public. When a single sentence can generate an interactive virtual world, the boundaries of digital creation are shattered. Tencent’s move not only equips developers with powerful tools but also builds 3D content infrastructure for the AI era; much as Android reshaped the mobile ecosystem, 3D generation technology is becoming a cornerstone of the metaverse.

With lightweight 0.5B-7B models for edge devices slated for open-source release by the end of the month, this technology is expected to reach phones and XR glasses. As creation barriers vanish, anyone can become a dream-weaver of virtual worlds, ushering in a new era of digital productivity.
